Error: 2LIS_11_VASCL yellow status for a long time

Infosource: 2LIS_11_VASCL

( Sales Order Schedule Line (As of 2.0B) )

Infopackage: 2LIS_11_VASCL_DELTA

Error: Yellow status for a long time

Action:

1. Double-click the Details tab. There is a data packet that is yellow; it may be hanging.

2. Double-click the Status tab.

Double-click the traffic light of Total.

Click Yes.

Click the red traffic light and save.

Click Yes.

The status of Total becomes red.

3. Click the yellow data packet, for example:

Data Packet 2 : Arrived in BW ;

Processing : This data packet was not updated in all data targets

Right-click it and select Manual update.

Processing continues in the background. When it is done, the data packet turns green. If more than one packet is yellow, the procedure sometimes needs to be repeated, on a case-by-case basis.

When all data packets are green, the status light on the right-hand side should be green. If it is not, undo the manual change to the Total status light by resetting it to 'Delete status, back to request status':

Double-click the Status tab.

Double-click the traffic light of Total.

Click Yes, select 'Delete status, back to request status' in the Set Overall Status box and save.

Error: 8YGTDS_C30 – Archive Process overdue

Infopackage: 8YGTDS_C30 – Archive

Error: Process overdue

Action:

In the monitor, on the Details tab, right-click the red data packet and select Manual update.

Sometimes the system lets you update manually when you right-click the red data packet. At other times, a message pops up saying that the request cannot be updated because it is already in a data target. In that case, proceed as follows.

Double-click the data target, e.g. YGTDS_C31.

In Manage Data Target, select the request and click the Delete button.

When the request is deleted, go back to the monitor.

On the Details tab, right-click the packet and select Manual update. Usually all data packets are processed in the background and turn green.

Error: 0EMPLOYEE Error when writing in PSA

Infosource: 0EMPLOYEE

Infopackage: YGTHR_0EMPLOYEE_ATTR

Error: Error when writing in PSA

1. The employee InfoPackage is part of the InfoPackage group ‘GTHR_Master_Data_Daily’, which loads critical master data. Until this load completes successfully, the transaction data loads will not run.

2. Copy the InfoPackage name ‘YGTHR_0EMPLOYEE_ATTR’ from the Header tab. Use the green arrow to go back to BW Administrator Workbench: Modelling.

3. Search for the InfoPackage in the InfoPackage groups. Once it is found, click the down arrow to locate the InfoPackage group.

4. Double-click the InfoPackage group ‘GTHR_Master_Data_Daily’.

5. On the Schedule tab, click Jobs.

6. Click Execute.

7. Highlight the job name with status Released. Click Job in the menu bar and select Schedule job and then Repeat.

8. Deselect Periodic job, click Immediate and save.

9. After a refresh, the job is shown as running.

ASSIGN_TYPE_CONFLICT

This dump is very common with data loads based on a DTP (Data Transfer Process). You can see your failed load in the DTP monitor as follows:

If you click the ABAP dump icon in the DTP monitor, you go to transaction ST22 (ABAP Runtime Error) to see the dump.

The dump is caused by changed metadata of the objects involved in the data load. A process (mode) used at runtime for BI objects has a certain, fixed lifetime. The generated program (GP) serves the transformation (TRAN) between the source and target objects of your load. It no longer matches the metadata, because the metadata changed during this lifetime.

This means that you recently changed something in your source or target objects (e.g. via transaction RSA1). You then transported only part of your changes rather than the whole data flow. Your GP* program therefore still references the "old" runtime version of objects that have already been changed to a "new" version. As the dump says:

assign _rdt_TG_1_dp->* to <_yt_tg_1>.

To fix such a failed load, you need to regenerate your GP* program, which means re-activating the transformation. This can be the first step.

If it does not help, go deeper and re-activate and re-transport the other objects involved in the data flow:

1. the source object of the DTP (for example a DSO, DataSource or InfoSource, depending on your particular flow);
2. the target object of the DTP (e.g. an InfoCube, a DSO or an InfoObject used as an InfoProvider);
3. the transformation;
4. the DTP itself.

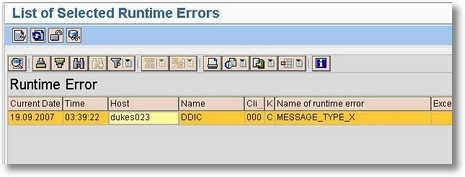

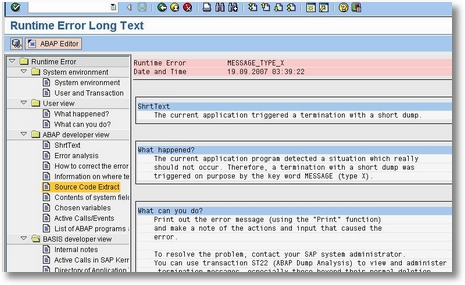

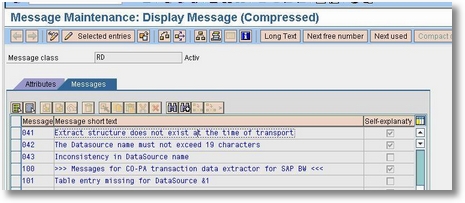
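The version mismatch described above can be pictured with a small conceptual model. This is a Python sketch under loose assumptions, not SAP code. The classes ObjectMetadata and GeneratedProgram and their integer version counters are hypothetical stand-ins for the runtime versions of the source and target objects and for the generated GP* program.

```python
from dataclasses import dataclass

# Conceptual model only (hypothetical names, not SAP objects): a generated
# program remembers the metadata versions it was generated against.


@dataclass
class ObjectMetadata:
    name: str
    version: int  # incremented whenever the object is changed and activated


@dataclass
class GeneratedProgram:
    source_version: int
    target_version: int


def generate(source: ObjectMetadata, target: ObjectMetadata) -> GeneratedProgram:
    """Re-activating the transformation corresponds to regenerating the program."""
    return GeneratedProgram(source.version, target.version)


def is_stale(program: GeneratedProgram, source: ObjectMetadata, target: ObjectMetadata) -> bool:
    """True if the program still refers to an older version of the source or target."""
    return (program.source_version != source.version
            or program.target_version != target.version)


source = ObjectMetadata("DSO", 1)
target = ObjectMetadata("InfoCube", 1)
program = generate(source, target)

target.version += 1                       # target changed, transformation not re-activated
print(is_stale(program, source, target))  # True: the load would dump
program = generate(source, target)        # re-activate the transformation
print(is_stale(program, source, target))  # False
```

In this model, only regenerating after every changed object is in place clears the mismatch. That is why a partial transport is not enough.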

ST22 (How to Check ABAP Dumps with Message Type X)

Steps:

- Run ST22 and double-click any entry.

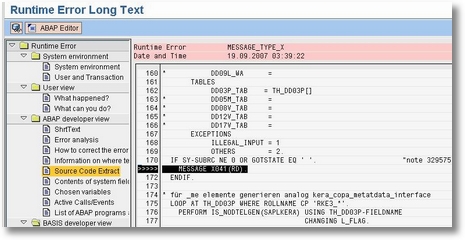

- Go to Source Code Extract

- Locate “>>>>” and you will see Message X041 in this example. Take note of the Message class and number.

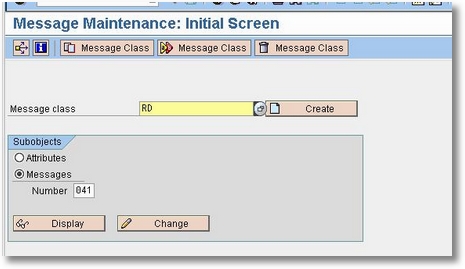

- Now open SE91. Enter the details we found from Source Code Extract in ST22:

  - Message Class: RD

  - Message Number: 041

Click Display

- Here, we determine what the message indicates

- Now we know that the ABAP dump with message type X is caused by the extract structure not existing at the time of transport. Inform the ABAP consultant or the functional consultant about this issue.
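Reading the message class and number out of the source line can be sketched as below. This is a hedged Python illustration. It assumes the line marked with ">>>>>" contains the short form of the ABAP MESSAGE statement, such as MESSAGE x041(rd). Dumps that use other forms (for example MESSAGE ID ... TYPE ... NUMBER ...) are not handled. The function name parse_message is hypothetical.

```python
import re

# Short form of the ABAP MESSAGE statement: one letter for the message type,
# three digits for the number, the message class in parentheses.
MESSAGE_RE = re.compile(r"MESSAGE\s+([AEIWSX])(\d{3})\(([A-Z0-9_/]+)\)", re.IGNORECASE)


def parse_message(line: str):
    """Return (type, class, number) to enter in SE91, or None if the line does not match."""
    match = MESSAGE_RE.search(line)
    if match is None:
        return None
    msg_type, number, msg_class = match.groups()
    return msg_type.upper(), msg_class.upper(), number


print(parse_message(">>>>>     MESSAGE x041(rd) WITH l_name."))  # ('X', 'RD', '041')
```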

DBIF_RSQL_SQL_ERROR

Some other terms that this issue may fall under are:

- RSEXARCA fails

- Archive job fails with ORA-01555

- ORA-01555: snapshot too old: rollback segment

- IDoc Archiving: Write Program fails

You schedule report RSEXARCA (the Archive Write job) for IDocs through SE38 or through transaction SARA for a very large number of IDocs. After a while, the program dumps with a DBIF_RSQL_SQL_ERROR short dump, as seen in the screenshot below.

The short dump is caused by ORA-01555, which is related to Oracle (see note 185822). The error mostly occurs when a large transaction needs a large rollback segment. Rollback information that still had to be read has already been overwritten, so a consistent read can no longer be guaranteed.

From an ALE perspective, the best way to avoid this error is to use a smaller selection range when starting the report RSEXARCA. However, you may want to involve your Oracle team for deeper analysis from the database perspective.
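Splitting one large selection into several smaller RSEXARCA runs can be planned as below. This is a minimal Python sketch that assumes the selection is a numeric IDoc number range. split_range and the chunk size are hypothetical, and the right chunk size depends on your system. The 16-digit zero padding matches the usual IDoc number length.

```python
def split_range(low: int, high: int, chunk_size: int):
    """Split the inclusive range [low, high] into consecutive sub-ranges of at most chunk_size numbers."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    ranges = []
    start = low
    while start <= high:
        end = min(start + chunk_size - 1, high)
        ranges.append((start, end))
        start = end + 1
    return ranges


for low, high in split_range(1000000, 1250000, 100000):
    # Each pair would be entered as the IDoc number selection of a separate run.
    print(f"{low:016d} - {high:016d}")
```

Smaller runs keep each transaction shorter, which is the point of the advice above. They do not replace a database-side review by the Oracle team.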

If you cannot solve the problem yourself and want to send an error

notification to SAP, include the following information:

1. The description of the current problem (short dump)

To save the description, choose “System->List->Save->Local File

(Unconverted)”.

2. Corresponding system log

Display the system log by calling transaction SM21.

Restrict the time interval to 10 minutes before and five minutes

after the short dump. Then choose “System->List->Save->Local File

(Unconverted)”.

3. If the problem occurs in a program of your own or a modified SAP program: The source code of the program

In the editor, choose “Utilities->More

Utilities->Upload/Download->Download”.

4. Details about the conditions under which the error occurred or which

actions and input led to the error.

The exception must either be prevented, caught within procedure “SUPPLEMENTALLY_SELECTION” “(FORM)”, or its possible occurrence must be declared in the RAISING clause of the procedure.

User and Transaction

Client………….. 900

User……………. “BWREMOTE”

Language Key…….. “E”

Transaction……… ” “

Transactions ID….. “EE9316E1F5C2F10C892B001A643617F0”

Program…………. “SAPLQOWK”

Screen………….. “SAPMSSY1 3004”

Screen Line……… 2

Information on caller of Remote Function Call (RFC):

System………….. “BP1”

Database Release…. 700

Kernel Release…… 700

Connection Type….. 3 (2=R/2, 3=ABAP System, E=Ext., R=Reg. Ext.)

Call Type……….. “asynchron with reply and transactional (emode 0, imode 0)”

Inbound TID……….” “

Inbound Queue Name…” “

Outbound TID………” “

Outbound Queue Name..” “

Client………….. 900

User……………. “BWREMOTE”

Transaction……… ” “

Call Program……… “SAPLQOWK”

Function Module….. “QDEST_RUN_DESTINATION”

Call Destination…. “sap-p1-bi-a01_BP1_02”

Source Server……. “sap-p1-bi-a01_BP1_02”

Source IP Address… “172.31.150.15”

Additional information on RFC logon:

Trusted Relationship ” “

Logon Return Code… 0

Trusted Return Code. 0

Please give me your valuable suggestions and help me resolve this issue.

SAP Consultant, needs help regarding DBIF_RSQL_SQL_ERROR CX_SY_OPEN_SQL_DB error Help!

http://www.saptechies.com/dbif_rsql_sql_error-sql-error-0-or-11/

Set Material Number Display (OMSL) & (RSKC)

In many situations, an extraction into BI fails because of an invalid value for an InfoObject. For example, I could not load 0MATERIAL_ATTR because some material numbers look like "[2Q235-A100060D" (note the '[' character). By default, '[' is not a permitted character in BI.

Check OSS note #173241 – “Allowed characters in the BW System” (same as the following picture).

If the material number cannot be changed for business reasons, we have to add the character to the permitted characters in BI.

Step1:

Run TCODE RSKC

Input '[' and execute the program. This will add '[' to the allowed characters list.

Many documents mention the parameter 'ALL_CAPITAL'. It is powerful but, in my opinion, also a little dangerous, so here I added only the character I actually needed.

After this, the extraction should work properly.
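A quick way to check which characters in a value would be rejected is sketched below in Python. The DEFAULT_ALLOWED set is an assumption based on the standard list described in OSS note #173241: blank, a set of special characters, digits and upper-case letters. Verify it against the note for your release. The function invalid_characters is hypothetical; its extra_allowed argument plays the role of the characters added through RSKC.

```python
import string

# Assumed default set of permitted characters (see OSS note #173241).
DEFAULT_ALLOWED = set(" !\"%&'()*+,-./:;<=>?_" + string.digits + string.ascii_uppercase)


def invalid_characters(value: str, extra_allowed: str = "") -> set:
    """Characters in value that are neither default-permitted nor added via RSKC."""
    allowed = DEFAULT_ALLOWED | set(extra_allowed)
    return {ch for ch in value if ch not in allowed}


print(invalid_characters("[2Q235-A100060D"))       # {'['}
print(invalid_characters("[2Q235-A100060D", "["))  # set()
```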

Step2:

Since I was loading 0MATERIAL, you can guess that this is a brand-new BI system. If your BI system has already been running for some time, please ignore this step. In a new system, Step 1 is not enough, and we encountered another error:

If we open the cube that contains 0MATERIAL to check the cube content (or open InfoObject 0MATERIAL), the following error message occurs.

Run transaction OMSL (or SPRO > IMG button > BW > General settings > Set Material Number Display) in both R/3 and BI, because the settings in BI must be the same as those in R/3.

Support Issues

1. tRFC errors

Solution: Contact the BASIS team and enquire about RFC and the transfer of IDocs. You can also check in BD87.

2. Delta Corruption

Solution: Sometimes the delta gets corrupted for various reasons. In that case, we have to re-initialize the DataSource.

3. Extra Characters

Solution: Maintain ALL_CAPITAL_PLUS_HEX in RSKC, maintain RSALLOWEDCHAR in SE16, or run RSKC_ALLOWED_CHAR_MAINTAIN in SE38.

4. Transaction Log Full

Solution: Contact the BASIS team and ask them to clear the transaction log.

5. Wrong Date Format

Solution: Identify the erroneous record, edit it in the PSA and upload again.

6. Conversion Exits

Solution: Sometimes data arrives in BW in a different format. If possible, correct it in the source system and reload.

7. DSO activation failed due to inconsistent requests in database tables

Solution: Sometimes we delete requests from the DSO/PSA/cube, but the requests still remain in the backend tables. We have to delete them from the database tables.

8. Source system was not available

Solution: When the BASIS team carries out maintenance activities, the source system is in use by them. Ask them to release it.

9. Delta records missing

Solution: If some records are missing from the delta for some reason, we have to run a "Repair Full" request.

10. Could not find code page for receiving system

Solution: Again an RFC/IDoc problem; contact BASIS.

11. Caller 70 missing

Solution: Restart the data load when the load on the source system is lower.

12. Timing out of queries

Solution: Check the aggregate valuation. If required, drop the existing aggregates and create new ones with a high valuation.

ZDATE_LARGE_TIME_DIFF

Links related to support issues:

http://www.sdn.sap.com/irj/scn/go/portal/prtroot/docs/library/uuid/5085d494-d5e5-2d10-aa82-81b2bd8e611b?QuickLink=index&overridelayout=true

-----------------------------------------------------------------------------------------------------------

http://www.sdn.sap.com/irj/scn/go/portal/prtroot/docs/library/uuid/5e47a690-0201-0010-739f-83431fa63175?QuickLink=index&overridelayout=true

SAP BI Production Support Issues

Production Support Errors :

1) Invalid characters while loading: When you load data, it may contain special characters such as @#$% etc., and BW throws an "invalid characters" error. Go to transaction RSKC, enter all the invalid characters and execute. This stores them in table RSALLOWEDCHAR. Then reload the data. You will no longer get the error, because these characters are now permitted via RSKC.

2) IDoc or tRFC error: We can see the following error on the Status screen:

Sending packages from OLTP to BW lead to errors

Diagnosis: No IDocs could be sent to SAP BW using RFC.

System response: There are IDocs in the source system ALE outbox that did not arrive in the ALE inbox of SAP BW.

Further analysis: Check the tRFC log. You can get to this log using the wizard or the menu path "Environment -> Transact. RFC -> In source system".

Removing errors: If the tRFC is incorrect, check whether the source system is completely connected to SAP BW. Check especially the authorizations of the background user in the source system.

Action to be taken: If the source system connection is OK, reload the data.

3) PROCESSING IS OVERDUE FOR PROCESSED IDOCs

Diagnosis: IDocs were found in the ALE inbox for the source system that have not been updated. Processing is overdue.

Error correction: Attempt to process the IDocs manually. You can do this using the wizard, or by selecting the IDocs with incorrect status and processing them manually.

Analysis: After looking at all the above error messages, we find that there are IDocs in the ALE inbox for the source system that have not been updated.

Action to be taken: Process the IDocs manually via RSMO -> Header tab -> Process manually.

4) LOCK NOT SET FOR LOADING MASTER DATA (TEXT / ATTRIBUTE / HIERARCHY)

Diagnosis: User ALEREMOTE is preventing you from loading texts to characteristic 0COSTCENTER. The lock was set by a master data loading process with the request number.

System response: For reasons of consistency, the system cannot allow the update to continue, and it has terminated the process.

Procedure: Wait until the process that is causing the lock is complete. You can call transaction SM12 to display a list of the locks. If a process terminates, the locks that have been set by this process are reset automatically.

Analysis: After looking at all the above error messages, we find that the data is locked by user ALEREMOTE.

Action to be taken: Wait for some time and try reloading the master data manually from the InfoPackage in RSA1.

5) Flat File Loading Error

Detail error message:

Diagnosis: Data records were marked as incorrect in the PSA.

System response: The data package was not updated.

Procedure: Correct the incorrect data records in the data package (for example by manually editing them in PSA maintenance). You can find the error message for each record in the PSA by double-clicking the record status.

Analysis: After looking at all the above error messages, we find that the PSA contains incorrect records.

Action to be taken: There are two ways to resolve this issue:

i) Correct the data in the source system and then load the data.

ii) Correct the incorrect records in the PSA and then update the data into the data target from there.

6) Object requested is currently locked by user ALEREMOTE

Detail error message:

Diagnosis: An error occurred in BI while processing the data. The error is documented in an error message: Object requested is currently locked by user ALEREMOTE.

Procedure: Look in the lock table to establish which user or transaction is using the requested lock (Tools -> Administration -> Monitor -> Lock entries).

Analysis: After looking at all the above error messages, we find that the object is locked. This probably happened because another background process was running.

Action to be taken: Delete the erroneous request, wait for some time and repeat the chain.
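The "wait and repeat" advice for the lock errors in items 4 and 6 can be sketched as a simple retry loop. This is a hypothetical Python model: ObjectLockedError, run_with_retries and the 10-minute default wait are assumptions. The load callable stands in for restarting the InfoPackage or the process chain.

```python
import time


class ObjectLockedError(Exception):
    """Hypothetical stand-in for 'Object requested is currently locked by user ALEREMOTE'."""


def run_with_retries(load, attempts=3, wait_seconds=600.0, sleep=time.sleep):
    """Call load(); on a lock error wait and retry, re-raising after the last attempt."""
    for attempt in range(1, attempts + 1):
        try:
            return load()
        except ObjectLockedError:
            if attempt == attempts:
                raise  # still locked: check SM12 to see who holds the lock
            sleep(wait_seconds)


# Usage: a fake load that is locked twice and then succeeds.
calls = {"n": 0}


def fake_load():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ObjectLockedError()
    return "green"


print(run_with_retries(fake_load, sleep=lambda seconds: None))  # green
```

Injecting `sleep` keeps the sketch testable. A fixed number of attempts avoids retrying forever when the lock is held by a hanging process.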

IDocs between R/3 and BW during extraction

1) When BW executes an InfoPackage for data extraction, the BW system sends a request IDoc (RSRQST) to the ALE inbox of the source system. The information bundled in the request IDoc (RSRQST) is:

Request ID (REQUEST)

Request date (REQDATE)

Request time (REQTIME)

InfoSource (ISOURCE)

Update mode (UPDMODE)

2) The source system acknowledges receipt of this IDoc by sending an info IDoc (RSINFO) back to the BW system. The status is 0 if it is OK or 5 if it failed.

3) Once the source system receives the request IDoc successfully, it processes it according to the information in the request. This request starts the extraction process in the source system, typically as a batch job whose name begins with BI_REQ. The request IDoc status now becomes 53 (application document posted). This status means the system cannot process the IDoc further.

4) The source system confirms the start of the extraction job to BW by sending another info IDoc (RSINFO) with status = 1.

5) Transactional Remote Function Calls (tRFCs) extract and transfer the data to BW in data packages. Another info IDoc (RSINFO) with status = 2 informs BW about the data package number and the number of records transferred.

6) At the end of the extraction process (i.e. when all the data records are extracted and transferred to BW), an info IDoc (RSINFO) with status = 9 is sent to BW, which confirms the extraction process.
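The RSINFO status values in the steps above can be summarized with a small Python sketch. The status meanings are taken from the steps. The functions describe and summarize and their wording are hypothetical. They only show how the sequence 0, 1, 2, ..., 9 (or a 5) tells you where an extraction stands.

```python
RSINFO_STATUS = {
    0: "request IDoc received (acknowledgement OK)",
    1: "extraction job started",
    2: "data package transferred",
    5: "error",
    9: "extraction completed",
}


def describe(statuses):
    """Translate RSINFO status values into readable text."""
    return [RSINFO_STATUS.get(s, "unknown status") for s in statuses]


def summarize(statuses):
    """Summarize the RSINFO status values BW received for one request, in arrival order."""
    if 5 in statuses:
        return "failed"
    if not statuses or statuses[0] != 0:
        return "request not acknowledged"
    packages = statuses.count(2)
    if statuses[-1] == 9:
        return f"completed with {packages} data package(s)"
    if 1 in statuses:
        return f"running, {packages} data package(s) so far"
    return "acknowledged, extraction job not started yet"


print(summarize([0, 1, 2, 2, 9]))  # completed with 2 data package(s)
```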

When is reconstruction allowed?

1. When a request is deleted in an ODS/cube, will it be available under Reconstruction?

Ans: Yes, it will be available on the Reconstruction tab, but only if the data was processed through the PSA. Note: This function is particularly useful if you are loading deltas, that is, data that you cannot request again from the source system.

2. Should the request be turned red before it is deleted from the target to enable reconstruction?

Ans: To enable reconstruction, you do not need to turn the request red. To enable a repeat of the last delta, however, you have to turn the request red before you delete it.

3. If the request is deleted while its status is green, is it also deleted from the Reconstruction tab?

Ans: No, it won't be deleted from the Reconstruction tab.

4. Does the behaviour of reconstruction and deletion differ when the target is different (ODS vs. cube)?

Ans: Yes.

How to Debug Update and Transfer Rules

1. Go to the Monitor.

2. Select the 'Details' tab.

3. Click 'Processing'.

4. Right-click any data package.

5. Select 'Simulate update'.

6. Tick the check boxes 'Activate debugging in transfer rules' and 'Activate debugging in update rules'.

7. Click 'Perform simulation'.

Error loading master data - Data record 1 ('AB031005823') : Version 'AB031005823' is not valid

Problem: I created a flat file DataSource for uploading master data. The data loaded fine up to the PSA. Once the DTP that runs the transformation is scheduled, it ends in the error below:

Solution: After referring to many links on SDN, I found the cause. Since the data comes from an external file, it does not match the SAP internal format. We should therefore mark the "External" format option in the DataSource (in this case for Material) and apply the conversion routine MATN1, as shown in the picture below.

Once the above changes were made, the load was successful.

Knowledge from SDN forums: Conversion takes place when the contents of a screen field are converted from display format to SAP-internal format and vice versa. It also takes place when outputting with the ABAP statement WRITE, depending on the data type of the field.

Check the info:

http://help.sap.com/saphelp_nw04/helpdata/en/2b/e9a20d3347b340946c32331c96a64e/content.htm

http://help.sap.com/saphelp_nw04/helpdata/en/07/6de91f463a9b47b1fedb5be18699e7/content.htm

This conversion exit (MATN1) adds leading zeros to the material number. If you query MAKT with MATNR as just 123, you will not get any values, so you should use this conversion exit to add the leading zeros.
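The effect of MATN1 on numeric material numbers can be modelled in Python as below. This is a simplified sketch, not the conversion exit itself. It assumes an 18-character material number and the non-lexicographical setting in OMSL; other OMSL settings change the behaviour. The function name matn1_input is hypothetical.

```python
def matn1_input(value: str, length: int = 18) -> str:
    """Simplified model of the MATN1 input conversion (external -> internal format).

    Assumption: purely numeric values are right-aligned and padded with
    leading zeros to the field length; other values are returned unchanged.
    """
    if value.isascii() and value.isdigit():
        if len(value) > length:
            raise ValueError("material number is longer than the field")
        return value.zfill(length)
    return value


print(matn1_input("123"))          # 000000000000000123
print(matn1_input("AB031005823"))  # AB031005823
```

This is why a MAKT lookup with the plain value 123 finds nothing: the stored key is the zero-padded internal form.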

To change the status of a request, use SE37 to execute the function module RSBM_GUI_CHANGE_USTATE. On the next screen, enter the request ID for I_REQUID and execute. On the following screen, select the 'Status Erroneous' radio button and continue. This function module changes the status of the request from green/yellow to red.

What happens if a green request is deleted?

Deleting a green request does no harm in itself. If you are loading via the PSA, you can go to the 'Reconstruction' tab, select the request and choose 'Insert/Reconstruct' to get it back. However, suppose you need to repeat this delta load from the source system. If you have deleted the green request, you will not get these delta records from the source system again.

Explanation: When the request is green, the source system receives the message that the data was loaded successfully. The next time the delta load is triggered, only new records are sent.

If for some reason you need to repeat the same delta load from the source, turning the request red sends the message that the load was not successful, so the delta records are not discarded. The delta queue in R/3 keeps them until the next upload is successfully performed in BW. The same records are then extracted into BW in the next delta request.
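The green/red behaviour of the delta queue can be illustrated with a toy Python model. The class DeltaQueue is hypothetical and deliberately simplified: a repeat returns only the records of the previous delta request. How a real extractor bundles new records with a repeat is not modelled.

```python
class DeltaQueue:
    """Toy model of a source-system delta queue (not SAP code)."""

    def __init__(self):
        self.new_records = []  # changes collected since the last delta request
        self.last_delta = []   # records of the last delta request, kept for a repeat

    def add_changes(self, *records):
        self.new_records.extend(records)

    def next_delta(self, last_request_green: bool):
        """Return the records that the next delta request would deliver."""
        if last_request_green:
            # Previous load confirmed: its records are discarded, new ones are sent.
            self.last_delta, self.new_records = self.new_records, []
        # Previous load red: the same records are delivered again (repeat delta).
        return list(self.last_delta)


queue = DeltaQueue()
queue.add_changes("order 1", "order 2")
print(queue.next_delta(last_request_green=True))   # ['order 1', 'order 2']
queue.add_changes("order 3")
print(queue.next_delta(last_request_green=False))  # ['order 1', 'order 2'] (repeat)
print(queue.next_delta(last_request_green=True))   # ['order 3']
```

Deleting a green request corresponds to calling next_delta(True) without a repeat. In this model, order 1 and order 2 could then only be recovered from the PSA.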

Appearance of Values in the Characteristic Input Help Screen

Which settings can I make for the input help and where can I maintain these settings?

In general, the following settings are relevant and can be made for the input help for characteristics:

- Display: Determines how the characteristic values are displayed, with the options "Key", "Text", "Key and text" and "Text and key".

- Text type: If there are different text types (short, medium and long text), this determines which text type is used to display the text.

- Attributes: You can determine which attributes of the characteristic are displayed initially in the input help. When a characteristic has a large number of attributes, it makes sense to display only a selection of them. You can also determine the display sequence of the attributes.

- F4 read mode: Determines the mode in which the input help obtains its characteristic values. The modes are "Values from the master data table (M)", "Values from the InfoProvider (D)" and "Values from the Query Navigation (Q)".

You can set one read mode for the input help during query execution (for example, in the BEx Analyzer or in the BEx Web) and another for the input help during query definition (in the BEx Query Designer). These settings can be made in three places:

- in InfoObject maintenance using transaction RSD1, in the context of the characteristic itself;
- in the InfoProvider-specific characteristic settings using transaction RSDCUBE, in the context of the characteristic within an InfoProvider;
- in the BEx Query Designer, in the context of the characteristic within a query.

Not all settings can be maintained in all contexts. The following table shows where each setting can be made:

| Setting | RSD1 | RSDCUBE | BEx Query Designer |
|---|---|---|---|
| Display | X | X | X |
| Text type | X | X | X |
| Attributes | X | - | - |
| Read mode: query execution | X | X | X |
| Read mode: query definition | X | - | - |

Note that the respective input helps in the BEx Web as well as in the BEx Tools enable you to make these settings again after executing the input help.

When do I use the settings from InfoObject maintenance (transaction RSD1) for the characteristic for the input help?

The settings made in InfoObject maintenance are active in the context of the characteristic and may be overwritten at higher levels if required. At present, the InfoProvider-specific settings and the BEx Query Designer belong to the higher levels. If the characteristic settings are not explicitly overwritten at a higher level, the settings from InfoObject maintenance are active.

When do I use the settings from the InfoProvider-specific characteristic settings (transaction RSDCUBE) for the input help?

You can make InfoProvider-specific characteristic settings in transaction RSDCUBE -> context menu for a characteristic -> InfoProvider-specific properties. These settings are active in the context of the characteristic within an InfoProvider and may be overwritten at higher levels if required. At present, only the BEx Query Designer belongs to the higher levels. If InfoProvider-specific settings have been made and are not explicitly overwritten at a higher level, they are active. Note that they therefore override the settings from InfoObject maintenance.

When do I use the settings in the BEx Query Designer for characteristics for the input help?

In the BEx Query Designer, select the characteristic and go to the tab pages "Display" and "Advanced" in the "Properties" area. There you can make the settings relevant to the input help. These settings are active in the context of the characteristic within a query and currently cannot be overwritten at higher levels. If the settings are not made explicitly, the settings from the lower levels take effect.
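The precedence rules above (query over InfoProvider over InfoObject) can be captured in a few lines of Python, together with the variable-screen rule described further below. This is a hypothetical sketch: effective_setting and its parameters are illustrations, and None means "not set explicitly at this level". Special cases such as the default read mode Q in the BEx Analyzer are not modelled.

```python
from typing import Optional


def effective_setting(infoobject: str,
                      infoprovider: Optional[str] = None,
                      query: Optional[str] = None,
                      variable_screen: bool = False) -> str:
    """Resolve one input-help setting (e.g. Display) across the three levels.

    The highest level with an explicit value wins: query (BEx Query Designer),
    then InfoProvider-specific settings (RSDCUBE), then InfoObject maintenance
    (RSD1). On the variable screen only the InfoObject setting is used.
    """
    if variable_screen:
        return infoobject
    for value in (query, infoprovider):
        if value is not None:
            return value
    return infoobject


print(effective_setting("Key", infoprovider="Text"))                       # Text
print(effective_setting("Key", "Text", "Key and text"))                    # Key and text
print(effective_setting("Key", "Text", "Key and text", variable_screen=True))  # Key
```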

How to Suppress Messages Generated by BW Queries

Standard Solution :

You might be aware of a standard solution. In transaction RSRT, select your query and click on the "message" button. Now you can determine which messages for the chosen query are not to be shown to the user in the front-end.

Custom Solution:

Only selected messages can be suppressed using the standard solution. However, there is a clever way to implement your own solution, and you don't need to modify the system for it!

All messages are collected by the function module RRMS_MESSAGE_HANDLING, so you only have to implement an enhancement at the start of this function module. In it, code your own logic to check input parameters such as the message class and number. If you don't want a message to appear in the front end, skip the rest of the processing.

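FUNCTION rrms_message_handling.

*$*$-Start: (1) implicit enhancement at the start of the function module

ENHANCEMENT 1 Z_CHECK_BIA.    "active version

* Filter the BIA message RSD_TREX W 136 (just testing it)

IF i_class = 'RSD_TREX' AND i_type = 'W' AND i_number = '136'.

EXIT.    "leave the function module, so this message is not passed on

ENDIF.

ENDENHANCEMENT.

*$*$-End: (1)

*" Local interface (abbreviated):

*"   IMPORTING ...

*"   EXCEPTIONS Dummy ..

* ... the standard coding of RRMS_MESSAGE_HANDLING follows here ...

ENDFUNCTION.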

How can I display attributes for the characteristic in the input help?

Attributes for the characteristic can be displayed in the respective filter dialogs in the BEx Java Web or in the BEx Tools using the settings dialogs for the characteristic. Refer to the related application documentation for more details.In addition, you can determine the initial visibility and the display sequence of the attributes in InfoObject maintenance on the tab page "Attributes" -> "Detail" -> column "Sequence F4". Attributes marked with "0" are not displayed initially in the input help.

Why do the settings for the input help from the BEx Query Designer and from the InfoProvider-specific characteristic settings not take effect on the variable screen?

On the variable screen, you use input helps for selecting characteristic values for variables that are based on characteristics. Since variables from different queries and from potentially different InfoProviders can be merged on the variable screen, you cannot clearly determine which settings should be used from the different queries or InfoProviders. For this reason, you can use only the settings on the variable screen that were made in InfoObject maintenance.

Why do the read mode settings for the characteristic and the provider-specific read mode settings not take effect during the execution of a query in the BEx Analyzer?

The query read mode settings always take effect in the BEx Analyzer during the execution of a query. If no setting was made in the BEx Query Designer, then default read mode Q (query) is used.

How can I change settings for the input help on the variable screen in the BEx Java Web?

In the BEx Java Web, at present, you can make settings for the input help only using InfoObject maintenance. You can no longer change these settings subsequently on the variable screen.

Selective Deletion in Process Chain

The standard procedure :

Use Program RSDRD_DELETE_FACTS

1. Create a variant which is stored in the table RSDRBATCHPARA for the selection to be deleted from a data target.

2. Execute the generated program.

Observations:

When executed, the generated program deletes the data from the data target based on the given selections. It also removes the variant created for this selective deletion from table RSDRBATCHPARA. As a result, the generated program will not delete anything on a second execution.

If we want to schedule this program in a process chain, we can comment out the statement that deletes the generated variant.

Example:

REPORT ZSEL_DELETE_QM_C10.

TYPE-POOLS: RSDRD, RSDQ, RSSG.

DATA:

  L_UID     TYPE RSSG_UNI_IDC25,

  L_T_MSG   TYPE RS_T_MSG,

  L_THX_SEL TYPE RSDRD_THX_SEL.

* Key of the variant (selections) stored in RSDRBATCHPARA

L_UID = 'D2OP7A6385IJRCKQCQP6W4CCW'.

IMPORT I_THX_SEL TO L_THX_SEL

  FROM DATABASE RSDRBATCHPARA(DE) ID L_UID.

* Commented out so that the variant survives and the program can run again:

* DELETE FROM DATABASE RSDRBATCHPARA(DE) ID L_UID.

CALL FUNCTION 'RSDRD_SEL_DELETION'

  EXPORTING

    I_DATATARGET            = '0QM_C10'

    I_THX_SEL               = L_THX_SEL

    I_AUTHORITY_CHECK       = 'X'

    I_THRESHOLD             = '1.0000E-01'

    I_MODE                  = 'C'

    I_NO_LOGGING            = ''

    I_PARALLEL_DEGREE       = 1

    I_NO_COMMIT             = ''

    I_WORK_ON_PARTITIONS    = ''

    I_REBUILD_BIA           = ''

    I_WRITE_APPLICATION_LOG = 'X'

  CHANGING

    C_T_MSG                 = L_T_MSG.

EXPORT L_T_MSG TO MEMORY ID SY-REPID.

* Flag the report as deletable in RSDRBATCHREP

UPDATE RSDRBATCHREP

  SET DELETEABLE = 'X'

  WHERE REPID = 'ZSEL_DELETE_QM_C10'.

ABAP program to find prev request in cube and delete

There will be cases when we cannot use the SAP built-in settings to delete the previous request, because the logic to determine the previous request is customized to a specific requirement. In such cases you can write an ABAP program that determines the previous request based on your own logic. The following tables are used:

RSICCONT: list of all requests in a particular cube

RSSELDONE: request number, source, target, selection InfoObject, selections, etc.

One example logic is to select the previous request based on the selection conditions used in the InfoPackage.
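A minimal model of the "same selections" logic, written in Python rather than ABAP so it can run outside an SAP system. The two lists stand in for rows of RSICCONT and RSSELDONE; the field names used here (`rnr`, `cube`, `field`, `low`, `high`) are hypothetical simplifications, not the real column names. The sketch assumes an older request counts as the "previous request" when it sits in the same cube and its InfoPackage selections are identical to those of the new request.

```python
from typing import Dict, FrozenSet, List, Tuple

# One selection condition: (selection InfoObject, low value, high value)
Selection = Tuple[str, str, str]


def selections_of(request: str, seldone: List[Dict[str, str]]) -> FrozenSet[Selection]:
    """Collect the selection conditions recorded for one request (RSSELDONE-like rows)."""
    return frozenset(
        (row["field"], row["low"], row["high"])
        for row in seldone
        if row["rnr"] == request
    )


def previous_requests(new_request: str, cube: str,
                      iccont: List[Dict[str, str]],
                      seldone: List[Dict[str, str]]) -> List[str]:
    """Return other requests in `cube` (RSICCONT-like rows) that were loaded
    with the same selections as `new_request`; these are the deletion candidates."""
    target = selections_of(new_request, seldone)
    return [
        row["rnr"]
        for row in iccont
        if row["cube"] == cube
        and row["rnr"] != new_request
        and selections_of(row["rnr"], seldone) == target
    ]
```

For example, if two requests in the same cube were both loaded for 0CALMONTH = 200801, the older one is returned as the candidate to delete, while requests for other months or in other cubes are left alone.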

TCURF, TCURR and TCURX

TCURF is always used together with the exchange rate (in currency translation). For example, say we want to convert figures from the FROM currency to the TO currency at the daily average rate (M), and the exchange rate is 2,642.34. If the factors for this currency combination for M in TCURF are 100,000:1, the effective exchange rate becomes 0.02642.
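The arithmetic of this example can be checked with a short Python sketch (not ABAP, so it runs outside SAP). It assumes the TCURF factor pair is read as FROM:TO and that the effective rate is the TCURR rate multiplied by the TO factor and divided by the FROM factor; the function name is hypothetical, since SAP applies the factors inside its own conversion routines.

```python
from decimal import Decimal


def effective_rate(rate: Decimal, from_factor: int, to_factor: int) -> Decimal:
    """Combine a TCURR-style rate with TCURF-style factors (assumed FROM:TO ratio)."""
    return rate * to_factor / from_factor


rate = effective_rate(Decimal("2642.34"), from_factor=100_000, to_factor=1)
print(rate)  # 0.0264234
```

Decimal is used instead of float so that the result is exact, which matters when comparing small rates like this one.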

Question (taken from SDN): Can't we have an exchange rate of 0.02642 and not use the factors from the TCURF table at all? I suppose we still have to maintain the factors as 1:1 in TCURF if we use an exchange rate of 0.02642, am I right? But why is this so? Can't I get rid of TCURF? What is the use of TCURF coexisting with TCURR?

Answer: Normally it is used to allow greater precision in calculations, i.e. a rate of 0.00011 with no factors gives a different result from 0.00111 with a factor of 10:1 (an effective rate of 0.000111).

So, based on the answer above, TCURF allows greater precision in calculations. Its factors should be considered before the exchange rate is applied.
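To see the precision argument in numbers, the Python sketch below assumes, for illustration only, that the rate field holds five decimal places. A rate of 0.000111 then has to be stored as 0.00011, whereas storing 0.00111 with a 10:1 factor keeps the extra digit. The helper names are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP

RATE_DECIMALS = Decimal("0.00001")  # assumption: the rate field holds 5 decimal places


def store_rate(rate: str) -> Decimal:
    """Round a rate to the precision the (assumed) rate field can hold."""
    return Decimal(rate).quantize(RATE_DECIMALS, rounding=ROUND_HALF_UP)


def convert(amount: Decimal, stored_rate: Decimal,
            from_factor: int = 1, to_factor: int = 1) -> Decimal:
    """Convert an amount using a stored rate and FROM:TO factors."""
    return amount * stored_rate * to_factor / from_factor


amount = Decimal("1000000")
no_factor = convert(amount, store_rate("0.000111"))          # rate stored as 0.00011
with_factor = convert(amount, store_rate("0.00111"), 10, 1)  # 0.00111 with factor 10:1
print(no_factor, with_factor)
```

Converting one million units gives 110 without factors but 111 with the 10:1 factor, which is the difference the answer above refers to.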

-------------------------------------------------------------------------------------

TCURR

The TCURR table is generally used when we create currency conversion types. The currency conversion types refer to the entries in TCURR defined for each currency pair (with a time reference) and get the exchange rate from the source currency to the target currency.
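Because TCURR entries carry a time reference, a conversion picks the rate that is valid on a given date. The Python sketch below models that lookup under simplifying assumptions: rates sit in a plain dictionary keyed by (rate type, from currency, to currency), each with a list of (valid-from date, rate) pairs, and the rate valid on a date is the one with the latest valid-from date on or before it. The real TCURR layout and SAP's conversion logic differ in detail; the names here are hypothetical.

```python
from bisect import bisect_right
from datetime import date
from decimal import Decimal
from typing import Dict, List, Tuple

# Hypothetical stand-in for TCURR: (rate type, from, to) -> [(valid_from, rate), ...]
RateTable = Dict[Tuple[str, str, str], List[Tuple[date, Decimal]]]


def rate_on(table: RateTable, rate_type: str, from_cur: str, to_cur: str,
            on: date) -> Decimal:
    """Return the rate whose valid-from date is the latest one on or before `on`."""
    entries = sorted(table[(rate_type, from_cur, to_cur)])
    valid_froms = [valid_from for valid_from, _ in entries]
    idx = bisect_right(valid_froms, on)
    if idx == 0:
        raise LookupError(f"no {rate_type} rate {from_cur}->{to_cur} valid on {on}")
    return entries[idx - 1][1]
```

Sorting first keeps the lookup correct even if entries were added out of date order; `bisect_right` then finds the last entry that starts on or before the requested date.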

-------------------------------------------------------------------------------------

TCURX

The TCURX table defines the correct number of decimal places for each currency. Its effect shows in the BEx report output.
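As a rough illustration of how a per-currency number of decimal places changes the displayed value, the Python sketch below uses a small dictionary as a stand-in for TCURX. It assumes that currencies not listed use two decimal places; the entries and the helper name are examples only.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical stand-in for TCURX: currency -> number of decimal places.
# Assumption: currencies that are not listed use two decimal places.
CURRENCY_DECIMALS = {"JPY": 0, "KWD": 3}


def format_amount(amount: Decimal, currency: str) -> str:
    """Round and format an amount with the currency's number of decimal places."""
    decimals = CURRENCY_DECIMALS.get(currency, 2)
    quantum = Decimal(1).scaleb(-decimals)  # e.g. 2 -> Decimal('0.01')
    rounded = amount.quantize(quantum, rounding=ROUND_HALF_UP)
    return f"{rounded:,.{decimals}f} {currency}"


for cur in ("JPY", "USD", "KWD"):
    print(format_amount(Decimal("1234.56"), cur))
```

The same stored value is shown as 1,235 JPY, 1,234.56 USD and 1,234.560 KWD.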

-------------------------------------------------------------------------------------

How to define F4 Order Help for infoobject for reporting

Open the Attributes tab of the InfoObject definition. There you will see a column for the F4 order help against each attribute of that InfoObject.

This field defines whether and where the attribute appears in the value help. Valid values:
• 00: The attribute does not appear in the value help.
• 01: The attribute appears at the first position (to the left) in the value help.
• 02: The attribute appears at the second position in the value help.
• 03: ......

Altogether, only 40 fields are permitted in the input help. In addition to the attributes, the characteristic itself, its texts, and the compounded characteristics are also generated in the input help. The total number of these fields cannot exceed 40.

Change the InfoObjects accordingly. For example, if 0COUNTRY (an attribute of 0VENDOR) should not be shown in the F4 help of 0VENDOR, set 00 against the attribute 0COUNTRY in the InfoObject definition of 0VENDOR.
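The positioning rules can be sketched in Python (not ABAP, so it runs outside SAP). The function name, its parameters, and the attribute settings in the usage are hypothetical. The sketch hides attributes set to 00, orders the rest by their position number, and applies a simplified reading of the 40-field limit in which the characteristic itself, its text fields, and any compounded characteristics also count.

```python
from typing import Dict, List

MAX_F4_FIELDS = 40  # total fields allowed in the input help


def f4_attribute_order(attribute_positions: Dict[str, int],
                       text_fields: int = 1,
                       compounded: int = 0) -> List[str]:
    """Return the attributes shown in the value help, left to right.

    Position 0 (entered as 00) hides an attribute; 1, 2, 3, ... give its
    column. The characteristic itself, its text fields and any compounded
    characteristics also count toward the 40-field limit.
    """
    shown = sorted((pos, attr) for attr, pos in attribute_positions.items() if pos > 0)
    columns = [attr for _, attr in shown]
    total = 1 + text_fields + compounded + len(columns)
    if total > MAX_F4_FIELDS:
        raise ValueError(f"{total} fields exceed the limit of {MAX_F4_FIELDS}")
    return columns


# Hypothetical settings for 0VENDOR: 0COUNTRY is hidden with 00
print(f4_attribute_order({"0COUNTRY": 0, "0CITY": 2, "0POSTAL_CD": 1}))
# ['0POSTAL_CD', '0CITY']
```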

Dimension Size Vs Fact Size

The current size of all dimensions relative to the fact table can be monitored by running report SAP_INFOCUBE_DESIGNS in transaction SE38. We can also test the InfoCube design with the RSRV tests, which report the dimension-to-fact ratio.

Each dimension table should have fewer than 10% as many records as the fact table. In the report, dimension tables are named /BI[C/0]/D[xxx]

Fact tables are named /BI[C/0]/[E/F][xxx]

Use T-CODE LISTSCHEMA to show the different tables associated with a cube.

When a dimension grows very large in relation to the fact table, the database optimizer cannot choose an efficient path to the data, because the guideline that each dimension should have less than 10 percent of the fact table's records has been violated.

The condition of large data growth in a dimension is called a degenerated dimension. To fix it, move the characteristics to different dimensions. This can only be done when there is no data in the InfoCube.
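The 10% guideline is simple arithmetic on row counts. The Python sketch below applies it to counts such as those shown by SAP_INFOCUBE_DESIGNS; the helper name, the table names, and the row counts in the usage are hypothetical.

```python
from typing import Dict, List

MAX_DIM_RATIO = 0.10  # guideline: a dimension should stay below 10% of the fact table


def oversized_dimensions(fact_rows: int, dim_rows: Dict[str, int]) -> List[str]:
    """Return dimension tables whose row count is 10% or more of the fact table."""
    if fact_rows <= 0:
        return []
    return sorted(
        name for name, rows in dim_rows.items()
        if rows / fact_rows >= MAX_DIM_RATIO
    )


dims = {"/BIC/DZSALES1": 5_000, "/BIC/DZSALES2": 150_000, "/BIC/DZSALESP": 20}
print(oversized_dimensions(1_000_000, dims))  # ['/BIC/DZSALES2']
```

Here the second dimension holds 15% as many records as the fact table, so its characteristics would be candidates for moving to other dimensions.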

Note: If you need to include item-level details in the cube, the dimension-to-fact size ratio will inevitably be higher, and that cannot be avoided. In that case, you can put the item characteristic in a line item dimension. A line item dimension is a dimension with only one characteristic in it. Because there is only one characteristic in the dimension, the fact table entry can link directly to the SID of the characteristic without using a DIMID (normally the DIMID in the dimension table connects the SID of the characteristic with the fact table). Since the link bypasses the dimension table, query performance is faster.
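To make the difference concrete, the Python sketch below models the two lookup paths with dictionaries: a normal dimension, where the fact row holds a DIMID that the dimension table maps to a SID, and a line item dimension, where the fact row holds the SID directly. The table contents and function names are hypothetical, and a real database join is more involved than a dictionary lookup.

```python
from typing import Dict

# Hypothetical SID table of an item characteristic: SID -> characteristic value
sid_table: Dict[int, str] = {101: "ITEM_0001", 102: "ITEM_0002"}

# Normal dimension: the dimension table maps DIMID -> SID
dim_table: Dict[int, int] = {1: 101, 2: 102}


def item_via_dimension(fact_row: Dict[str, int]) -> str:
    """Fact row -> DIMID -> dimension table -> SID -> value (two lookups)."""
    sid = dim_table[fact_row["dimid"]]
    return sid_table[sid]


def item_via_line_item(fact_row: Dict[str, int]) -> str:
    """Line item dimension: the fact row stores the SID directly (one lookup)."""
    return sid_table[fact_row["sid"]]


normal_fact = {"dimid": 2, "amount": 50}
line_item_fact = {"sid": 102, "amount": 50}
print(item_via_dimension(normal_fact), item_via_line_item(line_item_fact))
```

Both paths reach the same characteristic value; the line item path simply skips the dimension table step.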

BW Main tables

Extractor-related tables:

ROOSOURCE: on the source system (R/3 server), filter by OBJVERS = 'A'. Contains the DataSource, DataSource type, delta type, extraction method (table or function module), etc.

RODELTAM - Delta type lookup table.

ROIDOCPRMS - Control parameters for data transfer from the source system, result of "SBIW - General setting - Maintain Control Parameters for Data Transfer" on OLTP system.

MAXSIZE: Maximum size of a data packet in kilobytes

STATFRQU: Frequency with which status Idocs are sent

MAXPROCS: Maximum number of parallel processes for data transfer

MAXLINES: Maximum number of lines in a data packet

MAXDPAKS: Maximum number of data packets in a delta request

SLOGSYS: Source system

Query related tables:

RSZELTDIR: filter by OBJVERS = 'A'; DEFTP: REP = query, CKF = calculated key figure. Reporting component elements: query, variable, structure, formula, etc.

RSZELTTXT: similar to RSZELTDIR; texts of reporting component elements.

To get a list of query elements built on a cube:

RSZELTXREF: filter by OBJVERS = 'A', INFOCUBE = [cubename]

To get all queries of a cube:

RSRREPDIR: filter by OBJVERS = 'A', INFOCUBE = [cubename]

To get the query change status (version, last changed by, owner) of a cube:

RSZCOMPDIR: filter by OBJVERS = 'A'

Workbooks related tables:

RSRWBINDEX List of binary large objects (Excel workbooks)

RSRWBINDEXT Titles of binary objects (Excel workbooks)

RSRWBSTORE Storage for binary large objects (Excel workbooks)

RSRWBTEMPLATE Assignment of Excel workbooks as personal templates

RSRWORKBOOK 'Where-used list' for reports in workbooks

Web templates tables:

RSZWOBJ Storage of the Web Objects

RSZWOBJTXT Texts for Templates/Items/Views

RSZWOBJXREF Structure of the BW Objects in a Template

RSZWTEMPLATE Header Table for BW HTML Templates

Data target loading/status tables:

rsreqdone, " Request-Data

rsseldone, " Selection for current Request

rsiccont, " Request posted to which InfoCube

rsdcube, " Directory of InfoCubes / InfoProvider

rsdcubet, " Texts for the InfoCubes

rsmonfact, " Fact table monitor

rsdodso, " Directory of all ODS Objects

rsdodsot, " Texts of ODS Objects

sscrfields. " Fields on selection screens

Tables holding characteristics:

RSDCHABAS: fields

OBJVERS -> A = active; M=modified; D=delivered

(Business Content characteristics that have only a D version and no A version are not activated yet.)

TXTTABFL -> = x -> has text

ATTRIBFL -> = x -> has attribute

RODCHABAS: with fields TXTSHFL,TXTMDFL,TXTLGFL,ATTRIBFL

RSREQICODS: requests in ODS

RSMONICTAB: all requests

Transfer structures live in PSAPODSD

/BIC/B0000174000 Transfer structure

Master Data lives in PSAPSTABD

/BIC/HXXXXXXX Hierarchy:XXXXXXXX

/BIC/IXXXXXXX SID Structure of hierarchies:

/BIC/JXXXXXXX Hierarchy intervals

/BIC/KXXXXXXX Conversion of hierarchy nodes - SID:

/BIC/PXXXXXXX Master data (time-independent):

/BIC/SXXXXXXX Master data IDs:

/BIC/TXXXXXXX Texts: Char.

/BIC/XXXXXXXX Attribute SID table:

Master Data views

/BIC/MXXXXXXX master data tables:

/BIC/RXXXXXXX View SIDs and values:

/BIC/ZXXXXXXX View hierarchy SIDs and nodes:

InfoCube names in PSAPDIMD

/BIC/Dcube_name1 Dimension 1

......

/BIC/Dcube_nameA Dimension 10

/BIC/Dcube_nameB Dimension 11

/BIC/Dcube_nameC Dimension 12

/BIC/Dcube_nameD Dimension 13

/BIC/Dcube_nameP Data Packet

/BIC/Dcube_nameT Time

/BIC/Dcube_nameU Unit

PSAPFACTD

/BIC/Ecube_name Fact table (compressed)

/BIC/Fcube_name Fact table (uncompressed, request-based)

ODS Table names (PSAPODSD)

BW 3.5:

/BIC/AXXXXXXX00 ODS object XXXXXXX: Active records

/BIC/AXXXXXXX40 ODS object XXXXXXX : New records

/BIC/AXXXXXXX50 ODS object XXXXXXX : Change log

Previously:

/BIC/AXXXXXXX00 ODS object XXXXXXX: Active records

/BIC/AXXXXXXX10 ODS object XXXXXXX : New records

T-code tables:

TSTC: transaction codes and their program names

TSTCT: transaction code texts

1. What are tickets? Can you give an example?

Typical tickets in production support work could be:

1. Loading any of the missing master data attributes/texts.

2. Create ADHOC hierarchies.

3. Validating the data in Cubes/ODS.

4. If any of the loads runs into errors then resolve it.

5. Add/remove fields in any of the master data/ODS/Cube.

6. Data source Enhancement.

7. Create ADHOC reports.

1. Loading any of the missing master data attributes/texts - This would be done by scheduling the info packages for the attributes/texts mentioned by the client.

2. Create ADHOC hierarchies. - Create hierarchies in RSA1 for the info-object.

3. Validating the data in Cubes/ODS. - By using the Validation reports or by comparing BW data with R/3.

4. If any of the loads runs into errors then resolve it. - Analyze the error and take suitable action.

5. Add/remove fields in any of the master data/ODS/Cube. - Depends upon the requirement

6. Data source Enhancement.

7. Create ADHOC reports. - Create new reports based on the client's requirements.

Tickets are the tracking tool with which the user tracks the work we do. A ticket can be a change request, a data load, or anything else. Tickets are classified, for example, as critical or moderate; depending on the client, a critical ticket may need to be solved within a day or half a day. Once the ticket is solved, it is closed by informing the client that the issue is resolved. Tickets are raised during a support project for any issues or problems. If the support person faces an issue, he asks the operator to raise a ticket; the operator raises it and assigns it to the respective person. What counts as critical (the most complicated issues) depends on how you measure it. The concept of a ticket varies from contract to contract between companies. Generally, tickets raised by the client are handled by priority, such as high priority and low priority. A high-priority ticket has to be resolved as soon as possible; a low-priority ticket is considered only after the high-priority tickets have been attended to.

Checklist for a BPS support project. To start, the checklist covers:

1) InfoCubes / ODS / data targets
2) Planning areas
3) Planning levels
4) Planning packages
5) Planning functions
6) Planning layouts
7) Global planning sequences
8) Profiles
9) List of reports
10) Process chains
11) Enhancements in update routines
12) Any ABAP programs to be run and their logic
13) Major BPS development issues
14) Major BPS production support issues and their resolution

2. What tools are used to download tickets from the client? Are there standard tools, or does it depend on the company or client?

Yes, there are several tools for that, and which one is used depends on the client. We use HP OpenView. Some clients develop their own tools using Java, ASP, and other software, and some clients use just Lotus Notes. 'Vantive' is commonly used for tracking user requests and tickets.

A Vantive ticket has a ticket ID, a description of the problem, the severity for the business, the priority for the user, the assigned group, etc.

Different technical groups have different group IDs.

The user talks to the Level 1 helpdesk, which raises the ticket.

If the helpdesk can solve the issue, fine; otherwise the helpdesk assigns the ticket to the Level 2 technical group.

The ticket status keeps changing: open, working, resolved, on hold, back from hold, closed, etc. The way tickets are handled varies from client to client. Some companies use SAP CS to handle tickets; we have been using Vantage. A ticket is handled with a change request. When you get a ticket, it comes with a ticket ID and the priority level with which it is to be handled. The tool is entirely client specific. Common features are: ticket ID, priority, consultant ID/name, user ID/name, date of posting, resolution time, etc.

Ideally there is also a knowledge repository where you can search for similar problems and the solutions given if they occurred earlier. You can also have training manuals (with screenshots) for simple tasks such as viewing a query or saving a workbook, so that such queries can be answered using them.

When a problem is assigned to you as a consultant, you need to analyze it, check whether a similar problem occurred earlier so that you can reuse its solution, find out the exact server on which it occurred, etc.

You have to solve the problem (assuming you have access to the development system), post the solution, and ask the user to test it after preliminary testing on your side. Once it is tested, get it transported to production and close the ticket.

3. What are user authorizations in SAP BW?

Authorizations are very important; for example, you don't want an important financial report to be available to all users. You can set up authorizations at object level: to restrict access for a specific InfoObject, mark it as authorization-relevant in transaction RSD1 and maintain the authorization object in transaction RSSM. You then give users specific authorizations in transaction PFCG: create a role, include the transaction codes, BEx reports, etc. in the role, and assign the role to the user ID.
